We introduce a novel sketch-to-image tool that aligns with the iterative refinement process of artists. Our tool lets users sketch blocking strokes to coarsely represent the placement and form of objects and detail strokes to refine their shape and silhouettes. We develop a two-pass algorithm for generating high-fidelity images from such sketches at any point in the iterative process. In the first pass we use a ControlNet to generate an image that strictly follows all the strokes (blocking and detail) and in the second pass we add variation by renoising regions surrounding blocking strokes.

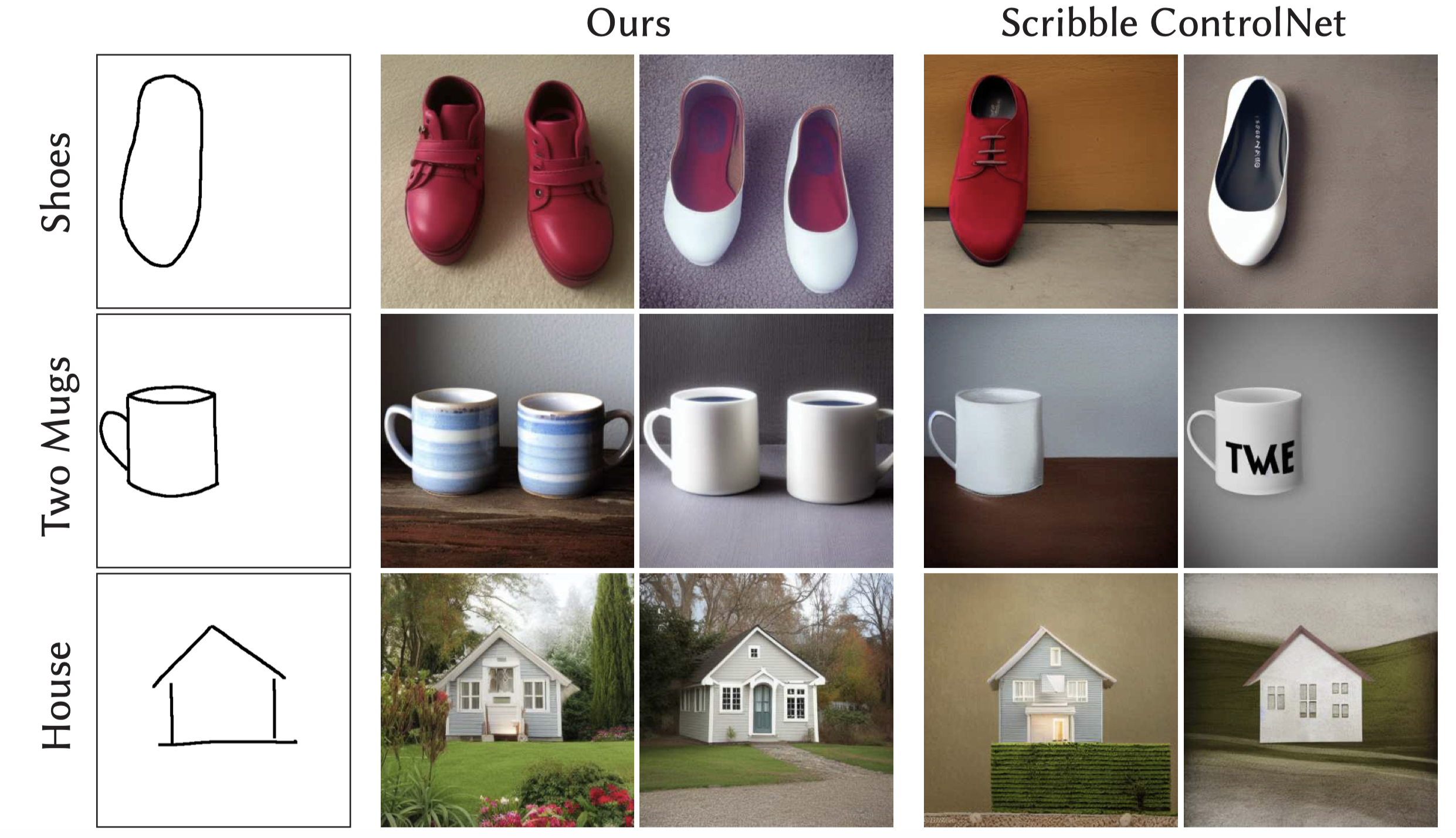

We also present a dataset generation scheme that, when used to train a ControlNet architecture, allows regions that do not contain strokes to be interpreted as not-yet-specified regions rather than empty space. We show that this partial-sketch-aware ControlNet can generate coherent elements from partial sketches that contain only a small number of strokes. The high-fidelity images produced by our approach serve as scaffolds that can help the user adjust the shape and proportions of objects or add elements to the composition. We demonstrate the effectiveness of our approach with a variety of examples and evaluative comparisons. Quantitatively, user feedback indicates that novice viewers preferred the quality of images from our algorithm over those from a baseline Scribble ControlNet in 84% of the pairs and found that our images had less distortion in 81% of the pairs.

In early stages of sketching, artists often specify object forms via rough blocking strokes. Standard ControlNet adheres too strictly to these strokes, creating images with object forms that are unrealistic or poorly proportioned (misshaped cat, overly circular flower, poorly proportioned scooter, simplified cupcake silhouette). Instead, our algorithm treats blocking strokes as rough guidelines for object form, enabling artists to generate visual inspiration that is both realistic and faithful to their intent.

We present walkthroughs of our system being used to generate a variety of images. Each figure shows a sequence of sketching steps and the generated image samples for each sketch. The supplemental videos also demonstrate how the generated samples scaffold the process. The walkthroughs illustrate the benefits of blocking, partial-sketch completion, and detailed sketch control for generating images that scaffold the iterative sketch-to-image process. Please refer to the paper for walkthrough details.

Our algorithm takes as input a text prompt (“a baseball photorealistic”) and a sketch consisting of blocking strokes (green) and detail strokes (black). In a first pass, it feeds all strokes to a partial-sketch-aware ControlNet to produce an image, denoted $I_{tc}$, that tightly adheres to all strokes. Here the contour of the baseball in $I_{tc}$ is misshapen because the input blocking strokes (green) are not quite circular. To generate variation in areas surrounding the blocking strokes, our algorithm applies a second diffusion pass we call Blended Renoising. Based on a renoising mask formed by dilating the input strokes, Blended Renoising generates variation in the area surrounding blocking strokes while preserving areas near detail strokes. The renoised output image corrects the baseball's contour, while the location of the stitching closely follows the user's detail strokes.
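The mask construction described above can be illustrated with a small, dependency-free Python sketch. It is not the paper's implementation. The function names, the detail-stroke radius `sigma_d`, the rule "dilated blocking region minus dilated detail region", and the hard per-pixel blend are all assumptions made for illustration. The second pass itself is a diffusion pass and is not modeled here; `blend` only shows where regenerated content could replace first-pass content.

```python
from typing import List, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)


def dilate(pixels: Set[Pixel], radius: float, height: int, width: int) -> Set[Pixel]:
    """Return every in-bounds pixel within `radius` (Euclidean) of a stroke pixel."""
    r = int(radius)
    out: Set[Pixel] = set()
    for y, x in pixels:
        for dy in range(-r, r + 1):
            for dx in range(-r, r + 1):
                ny, nx = y + dy, x + dx
                inside_disk = dy * dy + dx * dx <= radius * radius
                if inside_disk and 0 <= ny < height and 0 <= nx < width:
                    out.add((ny, nx))
    return out


def renoising_mask(blocking: Set[Pixel], detail: Set[Pixel],
                   sigma_b: float, sigma_d: float,
                   height: int, width: int) -> Set[Pixel]:
    """Assumed rule: vary pixels near blocking strokes, except those near detail strokes."""
    loosened = dilate(blocking, sigma_b, height, width)
    protected = dilate(detail, sigma_d, height, width)
    return loosened - protected


def blend(first_pass: List[List[float]], regenerated: List[List[float]],
          mask: Set[Pixel]) -> List[List[float]]:
    """Take the regenerated value inside the mask and the first-pass value elsewhere."""
    return [[regenerated[y][x] if (y, x) in mask else first_pass[y][x]
             for x in range(len(first_pass[0]))]
            for y in range(len(first_pass))]


# Toy 9x9 canvas: one blocking-stroke pixel and one nearby detail-stroke pixel.
H = W = 9
mask = renoising_mask({(4, 4)}, {(4, 6)}, sigma_b=2, sigma_d=1, height=H, width=W)
first = [[0.0] * W for _ in range(H)]   # stand-in for the first-pass image
second = [[1.0] * W for _ in range(H)]  # stand-in for regenerated content
result = blend(first, second, mask)
```

In this toy setup, pixels close to the detail stroke keep their first-pass values even when they also lie within the blocking stroke's dilation radius, which mirrors the "preserve areas near detail strokes" behavior described above.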

Dilation radius is more interpretable as a parameter for loosening spatial control than ControlNet strength. As the dilation radius of blocking strokes $\sigma_b$ increases, our algorithm maintains the rough shape of the vase while gradually allowing greater variation in the exact shape of its contour. In contrast, varying the ControlNet guidance strength without dilating the strokes leads to sudden, unpredictable behavior as the strength is decreased. We lose adherence to the strokes as the hands appear in the third image, and the vase completely loses its form by the fourth image.
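One way to see why a dilation radius behaves predictably: the region within distance r of a stroke is always contained in the region within any larger distance, so increasing the radius can only extend the area that is allowed to vary. The following minimal Python sketch checks this on a toy stroke by thresholding a brute-force distance field. The helper names and the 15x15 canvas are hypothetical, and this says nothing about how ControlNet strength behaves.

```python
import math
from typing import List, Sequence, Set, Tuple

Pixel = Tuple[int, int]  # (row, col)


def distance_to_strokes(stroke: Sequence[Pixel], height: int, width: int) -> List[List[float]]:
    """Brute-force Euclidean distance from each pixel to the nearest stroke pixel."""
    return [[min(math.hypot(y - sy, x - sx) for sy, sx in stroke)
             for x in range(width)]
            for y in range(height)]


def band(dist: List[List[float]], radius: float) -> Set[Pixel]:
    """Pixels whose distance to the stroke is at most `radius`."""
    return {(y, x)
            for y, row in enumerate(dist)
            for x, d in enumerate(row)
            if d <= radius}


# A short horizontal blocking stroke on a 15x15 canvas.
stroke = [(7, x) for x in range(5, 10)]
dist = distance_to_strokes(stroke, 15, 15)

# Sweep the radius: each band contains the previous one.
bands = [band(dist, r) for r in (0, 1, 2, 3)]
areas = [len(b) for b in bands]
nested = all(small <= large for small, large in zip(bands, bands[1:]))
```

Because the bands are nested, a user who raises the radius slightly sees a slightly larger editable region around the same stroke, rather than a qualitatively different one.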

Synthetic Data Generation. Ordering strokes by their distance from the boundary of the foreground object's mask (gray tint) enables the preferential deletion of strokes furthest from the object boundary. For the car, the first 20% of the lines capture a portion of the silhouette. This enables us to train a partial-sketch ControlNet that auto-completes object forms and also attempts to fill in details.
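The stroke-ordering idea can be sketched as follows. This is a simplified illustration and not the authors' data pipeline: the mean-distance metric, the 4-neighbor boundary definition, the `keep_fraction` parameter, and all function names are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int]  # (row, col)
Stroke = List[Pixel]


def boundary_pixels(mask: Sequence[Sequence[bool]]) -> List[Pixel]:
    """Foreground pixels with at least one 4-neighbor outside the mask."""
    h, w = len(mask), len(mask[0])
    out: List[Pixel] = []
    for y in range(h):
        for x in range(w):
            if not mask[y][x]:
                continue
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if not (0 <= ny < h and 0 <= nx < w) or not mask[ny][nx]:
                    out.append((y, x))
                    break
    return out


def stroke_distance(stroke: Stroke, boundary: Sequence[Pixel]) -> float:
    """Mean distance from the stroke's points to the nearest boundary pixel (assumed metric)."""
    nearest = [min(math.hypot(y - by, x - bx) for by, bx in boundary) for y, x in stroke]
    return sum(nearest) / len(nearest)


def partial_sketch(strokes: Sequence[Stroke], mask: Sequence[Sequence[bool]],
                   keep_fraction: float) -> List[Stroke]:
    """Keep the strokes closest to the object boundary; drop the farthest ones."""
    boundary = boundary_pixels(mask)
    ordered = sorted(strokes, key=lambda s: stroke_distance(s, boundary))
    keep = max(1, math.ceil(keep_fraction * len(ordered)))
    return ordered[:keep]


# Toy foreground mask: a 5x5 square inside a 9x9 image.
mask = [[2 <= y <= 6 and 2 <= x <= 6 for x in range(9)] for y in range(9)]
interior = [(4, 4)]                      # far from the boundary
near_edge = [(3, 3), (3, 4)]             # one pixel inside the boundary
top_edge = [(2, 2), (2, 3), (2, 4)]      # on the silhouette
bottom_edge = [(6, 5), (6, 6)]           # on the silhouette
kept = partial_sketch([interior, near_edge, top_edge, bottom_edge], mask, keep_fraction=0.5)
```

With half of the strokes kept, the two silhouette strokes survive and the interior strokes are dropped, which matches the intent that early partial sketches mostly capture the object outline.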

Our Partial-Sketch-Aware ControlNet vs Scribble ControlNet. Our Partial-Sketch-Aware ControlNet generates additional elements beyond the drawn strokes: generating multiple shoes instead of a single shoe for "shoes", generating a second mug for "two mugs", and generating a house with interesting foreground and background details from a simple outline for "house". The standard Scribble ControlNet fails to generate additional elements and produces minimal details in both the foreground and background of the house.

Support for this project was provided by Meta, Activision, Andreessen Horowitz and the Brown Institute for Media Innovation. Thank you to Joon Park, Purvi Goel, James Hong, Sarah Jobalia, Yingke Wang and Sofia Wyetzner for their feedback on our tool.

@inproceedings{10.1145/3654777.3676444,
  author = {Sarukkai, Vishnu and Yuan, Lu and Tang, Mia and Agrawala, Maneesh and Fatahalian, Kayvon},
  title = {Block and Detail: Scaffolding Sketch-to-Image Generation},
  year = {2024},
  isbn = {9798400706288},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  url = {https://doi.org/10.1145/3654777.3676444},
  doi = {10.1145/3654777.3676444},
  articleno = {33},
  numpages = {13},
  location = {Pittsburgh, PA, USA},
  series = {UIST '24}
}